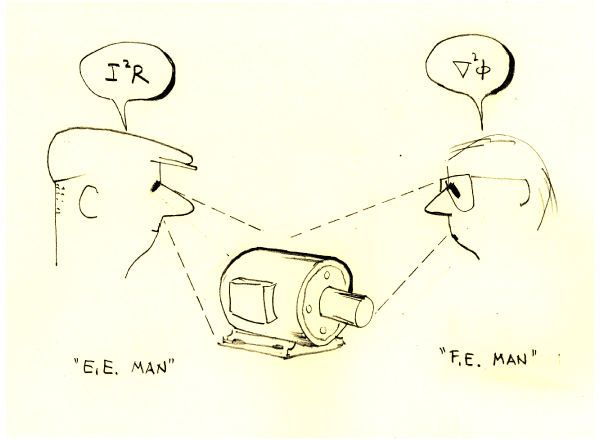

Fig. 1

Atomic simulation 1 — Imagine the simulation of a system or an electric machine atom by atom, indivisible particle by indivisible particle, in which there is one discrete finite element for each and every atom. There is a mathematical model (a system of differential equations and constitutive relations) for each and every atom; and there is, in addition, a supervisory system of equations and conditions which coordinates the behaviour and the activities of all the atoms in such a way that the whole set of simulation equations models the entire system. Atom by atom, in every physical mode of activity: thermal, mechanical, electromagnetic, and even chemical.

One can imagine that Mother Nature has devised such a system of “atomic simulation” and built it into every electric motor, indeed into every object in the universe, including every living creature, as well as the universe itself; and that by integrating or embedding the simulation equations into the atoms and objects themselves she has made the individual atoms not only autonomous but also able to interact with other atoms (large numbers of them) in a definite way, even though some aspects of the activity may seem chaotic or at least stochastic to our short-sighted eyes. This integration is so complete that the original system of “simulation equations” does not need its own computer, but is solved implicitly by (and indistinguishably from) the activities of the atoms themselves in real time. The system of “simulation equations” thus loses its identity as a simulation, and becomes what we might pathetically call an embedded control system, but without any separate means of executing the algorithms: they are all integrated or embedded in the atoms themselves, and they are observable (or partially observable) to us as the laws of physics, or the laws of nature.

Now let’s take these ideas into the lab. where we are testing a prototype electric motor. It’s clear that we cannot measure or even observe the activities of every atom in that motor. In fact the number of measurements we can make is several orders of magnitude smaller than the number of numbers we would need to characterize the activity of every atom. In other words, even if we had a computer big enough to simulate every atom in all its contortions, we would not be able to test the results in complete detail. We will always be limited by our instrumentation — that is, by the number and accuracy of the sensors we can apply to the motor. Even if we applied a hundred sensors, or a thousand, it would be nowhere near enough to observe the activity of every atom, or even every element in a finite-element simulation.

Would it matter, even if we could do it? What indeed is the point of pursuing “total simulation” to the level of simulating every atom? Clearly there is no point. Indeed the question is academic if not fatuous, and we will gain more from a practical question: At what level of mesh refinement should we stop, and decide that the mesh is fine enough?

In the early days of numerical analysis, the mesh was never fine enough, because of the computing limitations; we did not have the luxury of deciding to use a coarser mesh to speed things up, because we didn’t have fine meshes to start with. And here I would like to make a digression. Because of these limitations the methods of numerical analysis were sometimes ignored or rejected by engineers who preferred to rely on their classical procedures; computers were scarce and slow, and required new skills. But some of those engineers (myself included), impatient for results, didn’t realise that it was the dawn of a new era. This is in spite of the fact that the history of engineering is rich in examples of the development of new theory and new calculating procedures, going all the way back to Pythagoras and almost certainly even earlier. 2 Within living memory, engineers have strained to get better methods, with electrolytic tanks and Teledeltos™ paper 3, conformal mapping, and goodness knows what else, and now that we have extremely powerful simulation tools, these old methods are largely forgotten.

Getting back to mesh refinement, one of the ways to test it is to refine it progressively in stages, to see how much difference it makes in the results of interest. By the standards of the early days (the 1970s), it’s an extravagant luxury to be able to do this.
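As a rough illustration of refining in stages, here is a minimal Python sketch. Everything in it is an assumption chosen for convenience and has nothing to do with a real motor model: a one-dimensional test problem with a known exact answer, a "result of interest" taken as the mid-point value, a mesh that is doubled at each stage, and a 0.1% tolerance on the change between stages.

```python
import math

def midpoint_value(n):
    """Solve -u'' = pi^2 sin(pi x), u(0) = u(1) = 0, on n equal elements (n even)
    using second-order finite differences; return u at x = 0.5 (exact value: 1)."""
    h = 1.0 / n
    m = n - 1  # number of interior nodes
    # Tridiagonal system: -u[i-1] + 2 u[i] - u[i+1] = h^2 f(x_i)
    diag = [2.0] * m
    rhs = [h * h * math.pi ** 2 * math.sin(math.pi * (i + 1) * h) for i in range(m)]
    # Thomas algorithm (forward elimination); both off-diagonals are -1
    for i in range(1, m):
        w = -1.0 / diag[i - 1]
        diag[i] += w
        rhs[i] -= w * rhs[i - 1]
    # Back substitution
    u = [0.0] * m
    u[-1] = rhs[-1] / diag[-1]
    for i in range(m - 2, -1, -1):
        u[i] = (rhs[i] + u[i + 1]) / diag[i]
    return u[n // 2 - 1]  # interior node located at x = 0.5

def refine_until_converged(tol=1e-3, n=4, max_n=4096):
    """Double the mesh until the result of interest changes by less than tol."""
    history = [(n, midpoint_value(n))]
    while n < max_n:
        n *= 2
        value = midpoint_value(n)
        change = abs(value - history[-1][1]) / abs(value)
        history.append((n, value))
        if change < tol:
            break
    return history

history = refine_until_converged()
for n, value in history:
    print(f"{n:5d} elements: mid-point value = {value:.6f}")
```

The stopping rule only says that further refinement has stopped changing the answer by much; it does not by itself say that the answer is right, which is where testing comes in.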

Once we have a mesh that is deemed to be fine enough, measurements in the lab. can come to be regarded as sampled data. We can choose a few “points” at which to attach or connect sensors, transducers, etc.; and then we will compare their readings with the simulation. In doing this, we are sampling the simulation data at a limited number of points or instants. Nowadays a typical simulation, even a small one, will have many more nodes and elements than the number of physical test points or sensors. So the “sampling interval” is always going to be coarse. Moreover, transducers of all kinds generally do not measure point quantities, but integrals or averages taken over finite volumes that may contain a great number of finite elements in the numerical simulation. If we are meticulous in reviewing the simulation data we will find details (for example, hot-spots) that may easily be missed (and often are missed) in test data taken in the lab., because the measurement transducer was either too big or in the wrong place.
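The point about transducers averaging over many elements can be made concrete with a small Python sketch. The numbers are entirely hypothetical: a one-dimensional temperature profile with an 80 °C background and a narrow 40 °C hot-spot, sampled at 1001 simulation nodes, and a sensor modelled simply as the average of the nodal values under its footprint.

```python
import math

def temperature(x):
    """Hypothetical profile: 80 degC background plus a narrow 40 degC hot-spot at x = 0.62."""
    return 80.0 + 40.0 * math.exp(-((x - 0.62) / 0.01) ** 2)

# "Simulation" data: 1001 nodes along a unit length
nodes = [i / 1000 for i in range(1001)]
field = [temperature(x) for x in nodes]

def sensor_reading(centre, width):
    """Model a transducer as the average of the nodal values under its footprint."""
    inside = [t for x, t in zip(nodes, field) if abs(x - centre) <= width / 2]
    return sum(inside) / len(inside)

peak = max(field)                          # what the simulation shows
well_placed = sensor_reading(0.62, 0.1)    # right place, but too big
misplaced = sensor_reading(0.50, 0.1)      # wrong place

print(f"simulated peak      : {peak:.1f} degC")
print(f"sensor on hot-spot  : {well_placed:.1f} degC")
print(f"sensor off hot-spot : {misplaced:.1f} degC")
```

Under these assumptions the sensor sitting directly on the hot-spot reports only a few degrees above background, because its footprint is ten times wider than the hot-spot, and the misplaced sensor sees nothing at all.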

We have such faith in our simulation data that it is easy to forget about testing altogether. But there are several reasons why that would be a mistake. Since we have already convinced ourselves that we cannot test or observe every atom or even every element, it follows that data representing domains between or beyond the physical test points are interpolated or extrapolated. In other words, we are putting our faith in the simulation at all points where we cannot physically test it. Faith? Yes, faith. Faith in what we call the laws of physics; faith in mathematics; faith in algorithms; faith in software. Where does faith stop, and certainty begin? At the test points!

We take all this for granted; but there is another reason why testing is so important. Simulation per se does not guarantee that what is being simulated is the same entity (in all its attributes) as the actual physical object of interest (the motor). There may be differences in detail: the number of turns in the winding, the wire diameter, the magnetic properties of the steel, to name a few. Simulation results per se do not record such discrepancies: they only summarise the result of crunching whatever numbers were fed into the hopper. Only test will tell.

Let’s get some perspective. It is often assumed that bigger and faster simulation with finer and finer meshes will give better and better results, even though the results will always need to be tested, and even though there are separate questions of cost. 4 In this context the extraordinary development of simulation technology has changed our perception of the role and nature of classical theory. Previously, in the “dark ages” before computers, the classical theory was developed through a combination of human imagination, logic, and mathematical analysis applied to physical observations and measurements, and even beyond that to objects and mechanisms that were yet to be invented (so the process involved inspiration and perspiration as well, to borrow from Edison’s famous statement about “genius”). 5 The classical theory gave us an intellectual framework or structure for understanding and designing everything from steam engines to semiconductors, and it gave us formulas which we could use to put numbers to our ideas and measurements. 6 Indeed it gave us more than formulas: it gave us calculating procedures which we can find in abundance in any engineering textbook and in many classical papers.

Nowadays these procedures are programmed in software, permitting them to be improved, accelerated, and even replaced by better ones; and this evolution has progressed so far that classical procedures are rarely if ever executed by hand-and-slide-rule. Many of the formulas, however, and particularly the simpler ones, are still widely used for all sorts of daily tasks for which computer simulation would generally be unsuitable: quick calculations, sanity checks, etc. The classical procedures themselves, by contrast, at least in the form in which they were once calculated by hand-and-slide-rule, are liable to become obsolete, archaic, and forgotten. They never were all that accurate, anyway, by the standards we now expect from simulation technology. It’s quite possible that this evolution (or revolution) is almost complete.

But what cannot and must not be forgotten is the intellectual structure of the classical theory: the framework of our knowledge and understanding. If we let that go, along with the old manual methods of calculation, we would be “throwing the baby out with the bathwater” — not a bad analogy, as babies have a bright future whereas bathwater does not. The intellectual structure of the classical theory does not reside in a simulation process per se. A simulation process can be likened to a test programme in the lab. It produces similar results, in the form of a pile of data. Depending on the level of organization in the process, this pile of data may have very little structure, or a structure that cannot be easily understood. There is no inherent physically meaningful structure in the massed results of the solution of thousands of differential equations or the collection of thousands of thermocouple readings. Structure comes from the organization of that data, either before or after the process is executed: and for that organization we need the classical theory.

Let’s take this one last step. Imagine we had no classical theory. We have computers (big ones, really big ones) but no theory of electric machines, no theory of windings, no dq-axis transformation, no Ohm’s law. What will happen? We will soon end up with mountains of data, and we (or at least someone) will feel the need to make sense of it. This is perhaps analogous to the situation experienced by Faraday, Oersted, Ampère, Gauss, and engineers such as Blondel, Steinmetz, Dolivo-Dobrovolsky, Ferraris, and countless others, all of whom evolved theory from large amounts of experimental data and observations. Before their theories were formulated, the data must have lacked structure; that’s why they were so hungry for the theory that would explain it all. Without the theory, we would need to sift through all our simulation data, all our test data, and try to figure out the patterns and the rules and the laws of physics and engineering. If we were lucky, we might end up with ideas similar to those of the above-mentioned (at least, the successful ones among them). This would have to be called “re-inventing the wheel”. Ego requiem mea causa. I rest my case, the case for classical theory.

Notes

1 Oxford English Dictionary — “Atomism [1678]: Atomic philosophy; the doctrine of the formation of all things from indivisible particles endued with gravity and motion”. This dictionary entry should help to avoid giving the impression that we are discussing atomic fission or fusion (both of which are much more recent concepts). For U.S. readers, Webster’s Dictionary will be found to give similar explanations.

2 See Morris Kline, Mathematical Thought from Ancient to Modern Times, Oxford University Press, 1972

3 See https://en.wikipedia.org/wiki/Teledeltos. You can still buy it.

4 The idea that “bigger = more accurate” is an assumption, not a fact proven in all instances.

5 See https://en.wikiquote.org/wiki/Thomas_Edison

6 See https://www.azquotes.com/author/7873-Lord_Kelvin